Posts by user Blend4Life

21 July 2020 20:57

BUMP.

Note: If you post here on the B4W forum, your post will be held in moderation mode forever (unless you have an old, "trusted" account) and nobody can reply to your questions. Devs and moderators are gone and the forum is deserted. You can't get help here.

For now, we're on the Discord channel: https://discord.gg/T5ydDy7

02 July 2020 20:58

Hey rossFranks, I just saw this:

https://demo.pointcloud2cad.de/wp-content/uploads/verge3d/61/ship.html

(Click on "CLIPPING" and try the sliders.)

Made with Verge3D though.

18 March 2020 19:25

Reply to post of user aiman:

"Hello. Is it possible that we can have the user input the image via HTML and that image gets mapped on the 3D model?"

Yes, you load the image with FileReader and then use replace_image() to put that image on the model.
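Here is a rough sketch of the FileReader plus replace_image() idea, not a tested app. It assumes `m_tex` is the Blend4Web textures module (obtained with `b4w.require("textures")`), `obj` is the already loaded object, and `texName` is the name of its texture in Blender. The helper names `isImageFile` and `applyUserImage` and the element id in the comment are made up for illustration; check the API docs for your B4W version before relying on the `replace_image` arguments.

```javascript
// Hypothetical helper: accept only files whose MIME type starts with "image/".
function isImageFile(file) {
    return Boolean(file) && typeof file.type === "string" &&
        file.type.startsWith("image/");
}

// Hypothetical helper: read the user's file and put it on the model.
// m_tex, obj and texName are passed in by the caller (assumptions, see above).
// Returns false if the file was rejected, true if loading was started.
function applyUserImage(file, m_tex, obj, texName) {
    if (!isImageFile(file))
        return false;

    var reader = new FileReader();           // browser API
    reader.onload = function () {
        var img = new Image();
        img.onload = function () {
            // Swap the texture image once the browser has decoded it.
            m_tex.replace_image(obj, texName, img);
        };
        img.src = reader.result;             // data URL of the uploaded file
    };
    reader.readAsDataURL(file);
    return true;
}

// Possible wiring in the page (the id "upload" and the texture name are placeholders):
// document.getElementById("upload").addEventListener("change", function (e) {
//     applyUserImage(e.target.files[0], m_tex, obj, "my_texture");
// });
```

The HTML side is just an `<input type="file" accept="image/*">` element; everything else happens in the change handler.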

09 March 2020 10:13

08 March 2020 19:44

Well, I don't do VR and I'm a UE outsider, so maybe I shouldn't have replied to begin with!

My method for getting more quality into scenes is baking with Cycles. I also like using additional specular-only lamps to make the geometry and the normals come out more and reduce the flatness/dullness of the scene. This is effective for indoor scenes, and I can get a result much closer to Cycles. Without these "enhancements", I get pretty much the same result in B4W that I already see in the Blender viewport in B4W mode (= Blender Internal mode) with material shading on, and that is of course very poor compared to Cycles or desktop UE.

06 March 2020 22:56

06 March 2020 22:53

Wow, are you telling me there is a way to transfer THAT result to real-time B4W? I'd love to get that kind of quality. The best I can get is through baking with Cycles and applying that to B4W, so that's my "high-end" workflow. I could never get that depth of sharpness, sharp edges, model detail… Nothing in B4W looks as crisp as pre-rendered images or UE4, but I believe that's a limitation of the engine and WebGL itself rather than my ineptitude.

There's a B4W FAQ somewhere which says the quality of WebGL in general is clearly limited compared to non-real-time or desktop/console engines.

05 February 2020 10:43

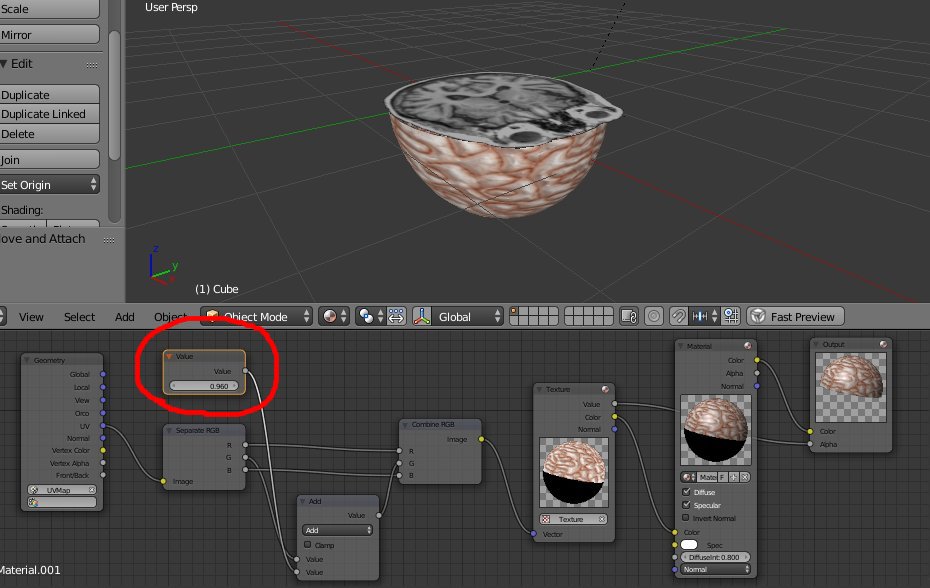

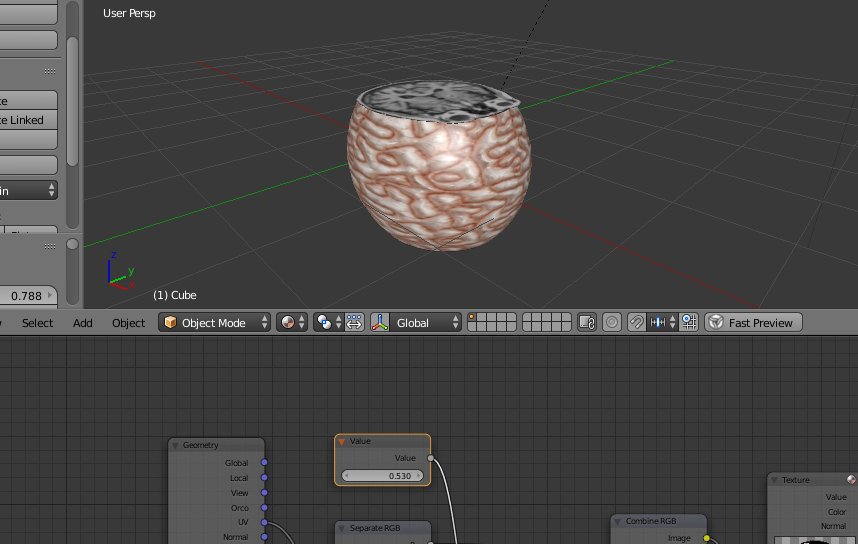

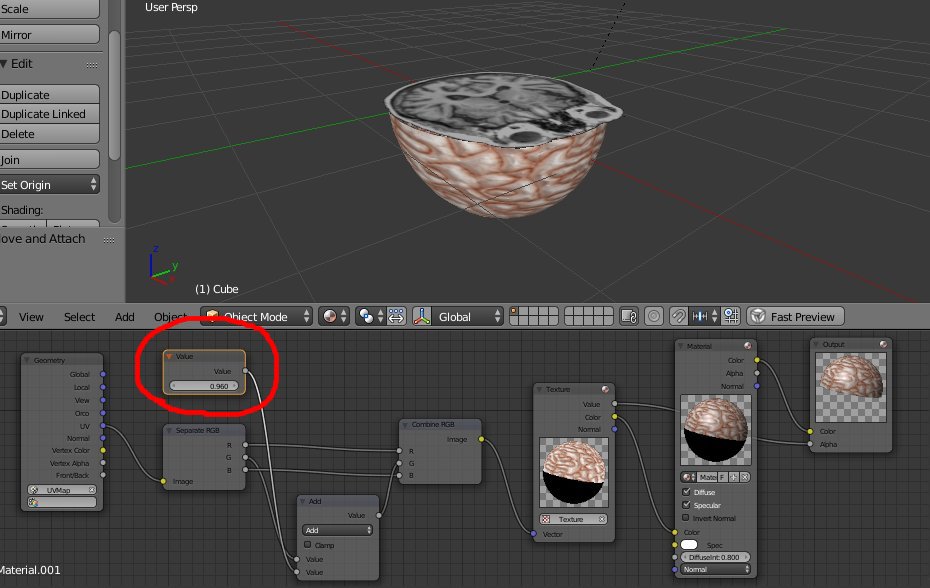

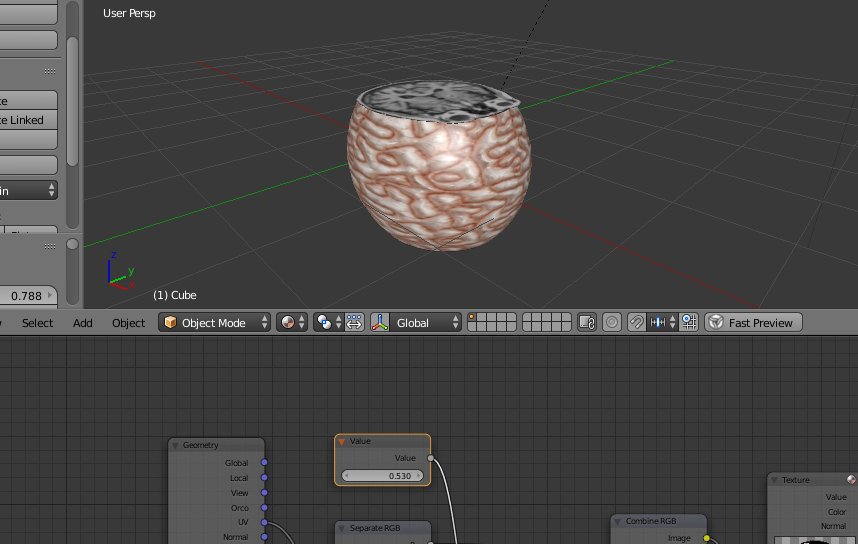

There is a simple trick that I think comes pretty close to what you want to achieve, without messing around with any geometry, and that is to animate the UV map on the brain model. Basically, you make a texture for the brain model and then blank out half of it (transparency). Then animate the UV map so that the cross section moves up or down across the brain. Add the slice (the plane with the brain-scan image) and move it in unison with the cross section.

You also need to swap in the correct slice image with m_tex.replace_image (not happening in the screenshots).

You can animate in the other axes by using 90° rotated models. The model (or rather the UV unwrap) must consist of precisely horizontal rows of polygons, otherwise you won't get a flat cross section.
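To make the slice switching a bit more concrete, here is a minimal sketch of how the current cross-section height could be mapped to a scan image. It assumes the scans are preloaded as image elements in an array ordered from bottom to top, that `m_tex` is the Blend4Web textures module, and that `cfg.zMin`, `cfg.zMax` and `cfg.texName` describe the model's height range and the slice plane's texture name. All of those names, and the two helper functions, are invented for the example.

```javascript
// Hypothetical helper: map a cut height z in [zMin, zMax] to an index
// in 0 .. sliceCount - 1, clamping heights outside the range.
function sliceIndexForHeight(z, zMin, zMax, sliceCount) {
    var t = (z - zMin) / (zMax - zMin);
    var clamped = Math.min(Math.max(t, 0), 1);
    return Math.min(Math.floor(clamped * sliceCount), sliceCount - 1);
}

// Hypothetical helper: put the matching scan image on the slice plane.
// m_tex, sliceObj and images are supplied by the caller (assumptions).
function showSliceAt(z, cfg, m_tex, sliceObj, images) {
    var i = sliceIndexForHeight(z, cfg.zMin, cfg.zMax, images.length);
    m_tex.replace_image(sliceObj, cfg.texName, images[i]);
    return i;
}
```

You would call `showSliceAt` whenever the cross section moves, for example from the same slider handler that drives the UV animation, and ideally only when the returned index actually changes.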

04 February 2020 10:29

03 February 2020 22:42

The devs say dynamic modifiers are not supported, except for armatures, so you can't use it.

Maybe you could simply apply the modifier for each frame, i.e. create many "sliced" copies of the Sphere along the cube's X axis and then animate them with show/hide as the cube goes through. Of course that's a large increase in geometry…
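The show/hide idea could look roughly like this from the scripting side, as an alternative to keyframing the visibility. This is a sketch under assumptions: `copies` is an array of the pre-sliced objects ordered along the cube's X axis, `range.xStart` and `range.xEnd` are the cube positions where the cut begins and ends, and `m_scenes` is the Blend4Web scenes module with its `show_object` and `hide_object` functions. The helper names are made up.

```javascript
// Hypothetical helper: pick which pre-sliced copy matches the cube's X position.
function activeCopyIndex(cubeX, xStart, xEnd, copyCount) {
    var t = (cubeX - xStart) / (xEnd - xStart);
    var clamped = Math.min(Math.max(t, 0), 1);
    return Math.min(Math.floor(clamped * copyCount), copyCount - 1);
}

// Hypothetical helper: show exactly one copy and hide all the others.
// m_scenes and copies are supplied by the caller (assumptions, see above).
function updateSlicedCopies(cubeX, range, copies, m_scenes) {
    var active = activeCopyIndex(cubeX, range.xStart, range.xEnd, copies.length);
    copies.forEach(function (obj, i) {
        if (i === active)
            m_scenes.show_object(obj);
        else
            m_scenes.hide_object(obj);
    });
    return active;
}
```

The geometry cost is the same as with the animated version, since every copy still has to be exported and loaded; only the switching logic moves into code.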
